Project Goal

Television broadcasts often require personally identifiable visual information (faces, license plates, addresses, etc.) to be obscured to preserve anonymity. This is especially true when the footage includes minors or witnesses. Doing so for a stationary interview is simple. However, if the subject moves relative to the camera, a painstaking manual post-processing step is required before broadcast. This introduces a significant delay and degrades the viewer experience. These features are often visible at the start of a broadcast and can easily be targeted by the camera operator before recording or broadcasting begins. Our goal is to create a proof-of-concept interface that will allow a camera operator to identify a feature in view (such as an individual's face) and mark it for automatic obfuscation in the subsequent live video. The system will track the feature automatically as it moves, concealing it by blurring or with an image overlay.

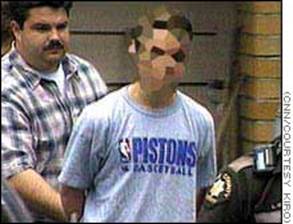

Figure 1: Pixelated image of a 13-year-old murder suspect turning himself in to the police (the youth's face has been obscured because he is a juvenile).
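Figure 1 shows the kind of mosaic (pixelation) concealment the system would apply to the tracked region. The following is a minimal sketch, assuming a grayscale frame stored as a list of rows of integers; `pixelate_region` is a hypothetical helper, not part of any existing code. It replaces each `block` x `block` tile inside the target rectangle with the tile's mean intensity. Blurring or an image overlay, as mentioned in the goal, would slot in at the same point.

```python
def pixelate_region(image, x0, y0, x1, y1, block=8):
    """Return a copy of `image` with the rectangle [x0, x1) x [y0, y1) pixelated.

    Assumption for illustration: `image` is a grayscale frame given as a list
    of rows of ints. Each block x block tile is replaced by its mean value.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    # Clamp the rectangle to the frame so partially off-screen targets work.
    x0, x1 = max(0, x0), min(width, x1)
    y0, y1 = max(0, y0), min(height, y1)
    out = [row[:] for row in image]  # leave the input frame untouched
    for by in range(y0, y1, block):
        for bx in range(x0, x1, block):
            # Tiles at the right/bottom edge of the region may be smaller.
            ys = range(by, min(by + block, y1))
            xs = range(bx, min(bx + block, x1))
            mean = sum(image[y][x] for y in ys for x in xs) // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = mean
    return out
```

For example, `pixelate_region(frame, 4, 4, 12, 12, block=4)` on a 16 x 16 frame flattens the central 8 x 8 square into four uniform tiles and leaves every pixel outside it unchanged. Larger blocks hide more detail at the cost of a coarser-looking patch.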

Project Scope

This project involves providing an interface that enables a user to target an object using a scalable region in the center of the image. Once the region is selected, a template key is created from it (using, for example, SIFT features, color histograms, or Gabor bunch graphs), and the region is then tracked and/or re-detected in subsequent frames. Re-detection allows the target to be reacquired if the camera pans away and then back, or if the subject turns around or leaves the frame. The subject is then obscured with an overlay. The system will be implemented on a desktop or laptop computer, but the algorithms could eventually run in real time directly on camera hardware.
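Of the template keys listed above, a color histogram is the simplest to illustrate. The following is a sketch under several assumptions: frames are lists of rows of `(r, g, b)` tuples, the targeting box is a centered square whose size is set by a hypothetical `scale` parameter, matching uses Swain and Ballard's histogram intersection, and re-detection is an exhaustive sliding-window search. All function names are hypothetical, and a real tracker (SIFT matching, mean shift, etc.) would replace the brute-force search.

```python
def center_region(width, height, scale):
    """Return (x0, y0, x1, y1) for a box centred in the frame.

    `scale` in (0, 1] is the box size as a fraction of the frame, standing in
    for a scalable targeting box in the centre of the viewfinder.
    """
    bw, bh = max(1, int(width * scale)), max(1, int(height * scale))
    x0, y0 = (width - bw) // 2, (height - bh) // 2
    return x0, y0, x0 + bw, y0 + bh


def color_histogram(frame, box, bins=4):
    """Normalised joint RGB histogram (bins**3 cells) of the pixels in `box`."""
    x0, y0, x1, y1 = box
    hist = [0.0] * (bins ** 3)
    for y in range(y0, y1):
        for x in range(x0, x1):
            r, g, b = frame[y][x]
            # Map each 0..255 channel value to a bin index 0..bins-1.
            ri, gi, bi = r * bins // 256, g * bins // 256, b * bins // 256
            hist[(ri * bins + gi) * bins + bi] += 1
    n = sum(hist)
    return [h / n for h in hist] if n else hist


def intersection(h1, h2):
    """Histogram intersection; 1.0 means identical normalised histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def find_template(frame, template_hist, box_w, box_h, stride=4, bins=4):
    """Slide a box over `frame`; return (score, box) of the best match."""
    height, width = len(frame), len(frame[0])
    best = (-1.0, None)
    for y0 in range(0, height - box_h + 1, stride):
        for x0 in range(0, width - box_w + 1, stride):
            box = (x0, y0, x0 + box_w, y0 + box_h)
            score = intersection(template_hist, color_histogram(frame, box, bins))
            if score > best[0]:  # keep the first box with the highest score
                best = (score, box)
    return best
```

In use, the operator centers the target and sizes the box, the template is captured once with `color_histogram(first_frame, center_region(w, h, scale))`, and each later frame is searched with `find_template`. A low best score suggests the target has left the frame, so the overlay can be suspended until it reappears. Color histograms ignore spatial layout, which makes them tolerant of the subject turning around but prone to confusing similarly colored objects.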

Tasks

- Task 1: Download the SIFT papers zip file (7 MB) and read all of the papers.

- Task 2: Download, run, and understand the MATLAB SIFT code (0.3 MB). Note that it does not include recognition keys.

- Task 3: Create a template key for the target region, using methods such as SIFT features, color histograms, and/or Gabor bunch graphs.

- Task 4: Implement a recognition scheme such as Lowe's affine warped Hough transform.

- Task 5: Identify catalogued SIFT features in subsequent frames and/or track the region.

- Task 6: Create a mouse interface to select the target region.

- Task 7: Lower the SIFT detection threshold and divide the Difference-of-Gaussians (DoG) response by the Sum-of-Gaussians (SoG) for greater lighting independence.
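The normalization in Task 7 can be sketched as follows. This is a minimal illustration, assuming a grayscale image stored as a list of rows of floats, a separable Gaussian blur with edge-replicated borders, and an assumed scale ratio `k = sqrt(2)` between the two Gaussians; the helper names are hypothetical and this is not the MATLAB SIFT code from Task 2. Because blurring is linear, multiplying the image by a constant scales both the DoG and the SoG by the same factor, so their ratio is unchanged.

```python
import math


def gaussian_kernel(sigma):
    """1-D Gaussian kernel truncated at 3 sigma and normalised to sum to 1."""
    radius = max(1, int(math.ceil(3 * sigma)))
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    s = sum(k)
    return [v / s for v in k]


def blur(image, sigma):
    """Separable Gaussian blur with clamped (edge-replicated) borders."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(image), len(image[0])
    # Horizontal pass, then vertical pass.
    tmp = [[sum(k[j] * image[y][min(w - 1, max(0, x + j - r))] for j in range(len(k)))
            for x in range(w)] for y in range(h)]
    return [[sum(k[j] * tmp[min(h - 1, max(0, y + j - r))][x] for j in range(len(k)))
             for x in range(w)] for y in range(h)]


def normalized_dog(image, sigma, k=math.sqrt(2), eps=1e-6):
    """Per-pixel (G(k*sigma) - G(sigma)) / (G(k*sigma) + G(sigma)).

    The numerator is the usual DoG response; dividing by the sum of the two
    Gaussians cancels a uniform multiplicative change in illumination.
    `eps` guards against division by zero in completely dark regions.
    """
    g_small = blur(image, sigma)
    g_large = blur(image, k * sigma)
    return [[(b - a) / (b + a + eps) for a, b in zip(row_s, row_l)]
            for row_s, row_l in zip(g_small, g_large)]
```

Since the normalized response is bounded in (-1, 1) instead of scaling with image contrast, the keypoint detection threshold has to be retuned for it, which fits with Task 7's instruction to lower the threshold.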

Project Status

Sam Z. Glassenberg, Samuel M. Pearlman, and David Lowsky.

Point of Contact

Sebastian Thrun

Midterm Report

submitted

Final Report

submitted