Project Goal

This project seeks to develop techniques for stitching together 3-D images of the type shown below. We can already acquire such textured range images, and we have good estimates of the position from which each range image was taken. In this project, we will develop techniques to seamlessly integrate large numbers of such images into a 3-D model of a building interior.

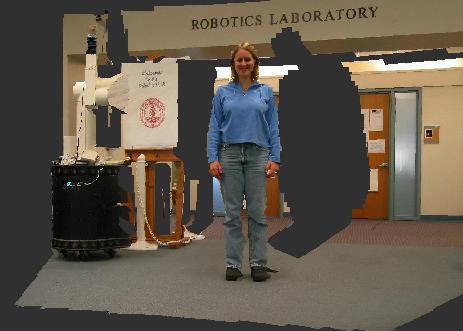

Figure 1: 3-D Image acquired by the Lab's Autonomous Segbot robot

Project Scope

This project involves gathering a number of 3-D range/texture images and recording each one together with the initial pose estimate from the robotic vehicle. The key questions are: how can we further adjust the pose estimates to make the images match, and how can we accommodate coloration effects so that image boundaries become invisible in the final map?
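
As one illustration of the coloration question, the sketch below estimates a per-channel gain that makes the colors of one image agree with another in the region where they overlap. It is only a sketch under stated assumptions: the function names `estimate_channel_gains` and `apply_gains` are hypothetical, colors are assumed to be RGB values in [0, 1], and the two input arrays are assumed to hold colors sampled at the same surface points in both images. A pure gain model is a simplification; the project may well need something richer.

```python
import numpy as np


def estimate_channel_gains(overlap_a, overlap_b):
    """Least-squares gain per color channel so that gain * a is close to b.

    overlap_a, overlap_b: (N, 3) arrays of RGB colors observed at the same
    N surface points in image A and image B (hypothetical inputs).
    """
    a = np.asarray(overlap_a, dtype=float)
    b = np.asarray(overlap_b, dtype=float)
    # Minimizing sum((g * a - b)^2) per channel gives g = sum(a*b) / sum(a*a).
    return (a * b).sum(axis=0) / (a * a).sum(axis=0)


def apply_gains(colors, gains):
    """Scale colors by the per-channel gains and clamp to the valid range."""
    return np.clip(np.asarray(colors, dtype=float) * gains, 0.0, 1.0)
```

Applying the estimated gains to all of image A before merging would reduce a uniform brightness or tint difference at the seam, though it would not remove spatially varying effects such as shading or specular highlights.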

Tasks

The project will be accomplished through the following tasks. You will be working closely with the Stanford Robot Learning Lab, which will provide the necessary infrastructure to collect the data and evaluate your results.

- Task 1: Collecting Data (we will help!)

- Task 2: Establishing correspondences in 3-D image space for alignment. Here we anticipate extracting SIFT features from these images, and aligning images by minimizing the distance between corresponding features.

- Task 3: Integrating texture images into a single 3-D model.

- Task 4: (if needed) Warping the images to improve their consistency.
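
The alignment step in Task 2 can be sketched as follows, assuming feature matching has already produced pairs of corresponding 3-D points (for example, the 3-D locations of matched SIFT features). The sketch uses the standard SVD-based least-squares solution for a rigid transform (often called the Kabsch method); this is one common choice, not necessarily the method the project will adopt, and the function name `estimate_rigid_transform` is hypothetical.

```python
import numpy as np


def estimate_rigid_transform(src, dst):
    """Find rotation R and translation t minimizing sum ||R @ src_i + t - dst_i||^2.

    src, dst: (N, 3) arrays of corresponding 3-D points, N >= 3 and not
    all collinear (hypothetical inputs from a feature matcher).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Center both point sets on their centroids.
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)

    # Cross-covariance of the centered points.
    H = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection: force det(R) = +1.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T

    t = dst_centroid - R @ src_centroid
    return R, t
```

In practice the robot's initial pose estimate would serve as the starting guess, and the transform returned here would be a correction to it. Mismatched features are not handled in this sketch; a robust wrapper such as RANSAC would be a natural addition.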

Project Status

all slots still available

Point of Contact

Mike Montemerlo and Sebastian Thrun

Midterm Report

not yet submitted