Project Goal

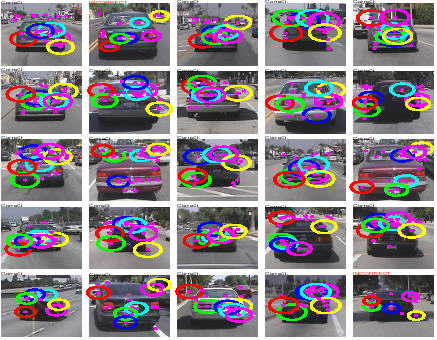

We'll provide you with a camera that has Pan-Tilt-Zoom (PTZ) controls so that it can actively "look" around in the world. The camera will be placed in a window and will pan, tilt and zoom around to create a Scale Invariant Feature Transform (SIFT)* feature "model" of the space it's looking at, and associate this model with the pan, tilt and zoom parameters. The features will be learned with a Gaussian model similar to that of Fergus et al.** to account for movements of trees, changing illumination, etc., and to wash out objects that aren't fixed to the scene, such as people or cars moving through. Once this model is learned, it will be used to do background SIFT subtraction, leaving only new objects such as people and cars. These moving objects will then be learned by the method of Fergus et al.** (but modified to use SIFT). What we'll end up with is a flexible security-type system that can learn its static environment and use that to learn the moving things it sees in it, as depicted in Figure 1.

Figure 1: Finding cars in a scene, ignoring background.
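To make the idea of a Gaussian model of feature locations concrete, here is a minimal sketch. It is not the Fergus et al. model itself: it assumes each background feature gets an independent per-axis Gaussian over its image position, updated online as frames come in. The class name `GaussianFeature`, the `min_std` floor and the `k`-sigma test are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class GaussianFeature:
    """Running Gaussian over the image position of one background feature."""
    n: int = 0
    mean_x: float = 0.0
    mean_y: float = 0.0
    m2_x: float = 0.0  # running sum of squared deviations in x
    m2_y: float = 0.0  # running sum of squared deviations in y

    def update(self, x, y):
        """Fold one observed position into the running mean and variance."""
        self.n += 1
        dx = x - self.mean_x
        self.mean_x += dx / self.n
        self.m2_x += dx * (x - self.mean_x)
        dy = y - self.mean_y
        self.mean_y += dy / self.n
        self.m2_y += dy * (y - self.mean_y)

    def std(self, min_std=1.0):
        """Per-axis standard deviation, floored at min_std pixels (assumed value)."""
        if self.n < 2:
            return (min_std, min_std)
        sx = math.sqrt(self.m2_x / (self.n - 1))
        sy = math.sqrt(self.m2_y / (self.n - 1))
        return (max(sx, min_std), max(sy, min_std))

    def is_background(self, x, y, k=3.0):
        """True if (x, y) lies within k standard deviations on both axes."""
        sx, sy = self.std()
        return abs(x - self.mean_x) <= k * sx and abs(y - self.mean_y) <= k * sy


# A leaf that sways by a pixel or two around (100, 50).
leaf = GaussianFeature()
for x, y in [(100, 50), (102, 51), (98, 49), (101, 50), (99, 50)]:
    leaf.update(x, y)

print(leaf.is_background(101, 50))  # small sway, still background
print(leaf.is_background(140, 50))  # far from the learned position
```

A feature that moves a little (a swaying branch) ends up with a wider Gaussian and is still accepted as background, while a feature far from any learned position is not. Washing out transient objects would additionally need some rule for dropping features that were seen in only a few frames, for example a threshold on `n`; that rule is not shown here.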

Project Scope

The project will use the existing Matlab SIFT code developed at Intel as a basis for feature finding; recognition keys will have to be added to the features found by this code. A pan-tilt-zoom (PTZ) camera will be supplied, hopefully with a decent control and readout interface already established. Fergus, Perona and Zisserman's method will be modified to use SIFT features and will serve as the basis for learning the scene model, and then for learning moving objects that enter and exit the scene. Except for experimentation, the implementation of this project probably has to be in C or C++, unless you can control the camera and get data back from it in Matlab.
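Once features carry recognition keys, one plausible way to use them is nearest-neighbour matching with a distance-ratio test. The sketch below assumes a key is simply a list of floats compared by Euclidean distance. The function names and the 0.8 ratio are illustrative assumptions, and the 4-element vectors stand in for real, much longer keys.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length key vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def match_keys(query, database, ratio=0.8):
    """Map query index -> database index for unambiguous nearest neighbours.

    A match is kept only if the best distance is clearly smaller than the
    second-best one (best < ratio * second_best).
    """
    matches = {}
    for qi, q in enumerate(query):
        dists = sorted((euclidean(q, d), di) for di, d in enumerate(database))
        if len(dists) < 2:
            continue  # the ratio test needs two candidates
        (best, best_i), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches[qi] = best_i
    return matches


# Toy 4-element keys, for illustration only.
database = [[0, 0, 0, 1], [1, 0, 0, 0], [0, 1, 0, 0]]
query = [
    [0.9, 0.1, 0, 0],  # clearly closest to database[1]
    [0.5, 0.5, 0, 0],  # equally close to database[1] and database[2]
]
print(match_keys(query, database))  # {0: 1}
```

The second query key is rejected because its two best candidates are equally distant. A key that stays stable under zoom, perspective and lighting changes should keep passing this test as the camera moves.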

Tasks

The project will be accomplished through the following tasks. Basic project completion is at Task 6. Hopefully you will press past this.

- Task 1: Download, run and familiarize yourself with the Matlab SIFT (0.3M) code.

- Task 2: Read the background papers on SIFT: original (0.5M) and current (0.5M). Also read Fergus et al. (3.6M) and, for background, Weber (0.8M).

- Task 3: Collect PTZ data and use this to find a recognition key that is stable under zooms, perspective changes, lighting changes and small camera movements. We might have to explore pre-normalization schemes for lighting, such as those in the "improve SIFT" project (or make use of that project directly).

- Task 4: Use the method of Fergus et al., but with SIFT features, to create Gaussian models of feature locations that allow for movements of trees and wash out transient objects (people, cars, etc.) from the model.

- Task 5: Create a Gaussian-SIFT database of the camera scene that associates the PTZ parameters (pan, tilt, zoom) with the scene locations viewed.

- Task 6: Blank out known features so that only new, moving objects in the scene are detected.

- Task 7: Use the method of Fergus et al., but with SIFT features, to learn and recognize the detected moving objects.

- Task 8: Label and track people.
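Tasks 5 and 6 can be pictured with a small sketch: a database keyed by quantized PTZ settings, and a filter that blanks out observed features lying close to a known background feature for the current view. Everything here is an assumption made for illustration: the class name `PTZDatabase`, the bin sizes, the units (degrees for pan and tilt, an arbitrary zoom scale), and matching by image position only. A real system would presumably also compare the recognition keys.

```python
import math
from collections import defaultdict


class PTZDatabase:
    """Background feature positions, indexed by quantized (pan, tilt, zoom)."""

    def __init__(self, pan_step=5.0, tilt_step=5.0, zoom_step=1.0):
        self.steps = (pan_step, tilt_step, zoom_step)
        self.views = defaultdict(list)  # view key -> [(x, y, radius), ...]

    def _key(self, pan, tilt, zoom):
        """Quantize camera settings so nearby poses share one view entry."""
        values = (pan, tilt, zoom)
        return tuple(math.floor(v / s) for v, s in zip(values, self.steps))

    def add(self, pan, tilt, zoom, x, y, radius):
        """Record a background feature at (x, y) with a tolerance radius in pixels."""
        self.views[self._key(pan, tilt, zoom)].append((x, y, radius))

    def new_features(self, pan, tilt, zoom, observed):
        """Return observed (x, y) points not explained by the stored background."""
        known = self.views.get(self._key(pan, tilt, zoom), [])
        return [
            (x, y)
            for (x, y) in observed
            if not any((x - kx) ** 2 + (y - ky) ** 2 <= r * r for kx, ky, r in known)
        ]


db = PTZDatabase()
db.add(10.0, -5.0, 2.0, x=100, y=50, radius=5)
db.add(10.0, -5.0, 2.0, x=200, y=80, radius=5)

# Same view bin as above: only the unexplained point survives.
print(db.new_features(11.0, -4.0, 2.3, [(101, 51), (150, 60), (200, 80)]))
# [(150, 60)]
```

Quantizing the pose is the simplest possible association between PTZ parameters and scene content. It ignores the fact that a small pan shifts every feature in the image, so a fuller version would map features into a common pan/tilt coordinate frame instead of storing raw pixel positions per bin.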

Pre-requisites

At least one of the team members should be a decent C/C++ coder who can build a real-time control/recognition system using the camera (or else a Matlab whiz who can do everything inside Matlab).

Project Contact


Project Status

Gary Chern, Jared Starman, and Paul Briant.

Midterm Report

submitted

Final Report

submitted

* David G. Lowe, "Object Recognition from Local Scale-Invariant Features", ICCV'99

** R. Fergus, P. Perona, A. Zisserman, "Object Class Recognition by Unsupervised Scale-Invariant Learning", CVPR 2003