Project P11:

Learning Optical Flow from Control Commands of a Mobile Robot

Project Goal

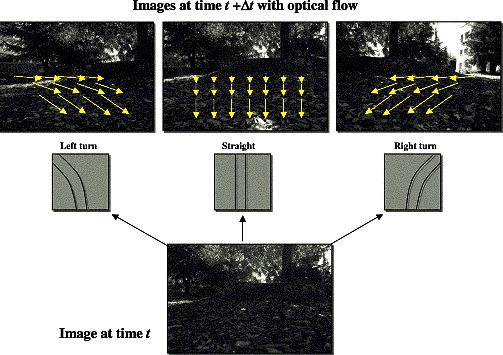

As part of the DARPA Learning Applied to Ground Robots (LAGR) Program, the Stanford AI Lab is developing software that would allow an autonomous robot to navigate off-road terrain quickly using computer vision. Our approach pairs a reverse optical flow algorithm with short-range sensor readings to classify objects or terrain patches, at distances where this information is still useful, as either obstacles to be avoided or terrain types that allow high speed. Once pixel regions in the current camera image have been ranked for traversability, a control algorithm must choose the robot's velocity and direction so that obstacles are avoided and the goal is reached as quickly as possible. An intuitive approach to this problem that does not resort to a full 3D map of the world is to learn how the optical flow in the image behaves given the robot's commanded velocity and direction. This information would then be used to steer the robot over desirable image patches and away from perceived obstacles. The image above demonstrates how optical flow varies as a function of the robot's movement.

The LAGR robot is equipped with two stereo camera pairs (producing four simultaneous images). One hurdle in this project is choosing a method for integrating information from the four views that makes the most of the 110-degree total field of view.
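The steering idea in the project goal can be illustrated with a toy sketch. It assumes a learned model is already available as a table of predicted per-cell image motion for each candidate command, and that traversability scores exist for a coarse grid of image cells. The function name `choose_command`, the grid layout, the command labels, and the score scale are all hypothetical and are not part of the project description.

```python
def choose_command(scores, predicted_flow, footprint):
    """Pick the command whose predicted flow brings the best terrain into the footprint.

    scores         -- {(row, col): traversability}, higher is better (hypothetical scale)
    predicted_flow -- {command: {(row, col): (d_row, d_col)}}, predicted cell motion per step
    footprint      -- (row, col) of the image cell the robot is about to drive over
    """
    best_command, best_score = None, None
    for command, flow in predicted_flow.items():
        # Scores of all cells that the model predicts will move into the footprint.
        arriving = [
            scores[cell]
            for cell, (d_row, d_col) in flow.items()
            if cell in scores and (cell[0] + d_row, cell[1] + d_col) == footprint
        ]
        if not arriving:
            continue  # the model predicts nothing entering the footprint for this command
        worst = min(arriving)  # judge a command by the worst terrain it would drive over
        if best_score is None or worst > best_score:
            best_command, best_score = command, worst
    return best_command


# Toy 3x3 image grid: row 2 is nearest the robot and (2, 1) is its footprint.
scores = {(1, 0): 0.9, (1, 1): 0.1, (1, 2): 0.6}
predicted_flow = {
    "straight": {(1, 1): (1, 0)},   # scene flows straight down the image
    "left": {(1, 0): (1, 1)},       # turning left shifts the scene to the right
    "right": {(1, 2): (1, -1)},     # turning right shifts the scene to the left
}
print(choose_command(scores, predicted_flow, footprint=(2, 1)))  # prints "left"
```

Here the cell straight ahead scores poorly (0.1), so the sketch prefers the command that brings the better-scoring left-hand cell under the robot. A real controller would also need to weigh progress toward the goal, which this sketch ignores.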

Project Scope

This project will use the concepts of feature detection and feature tracking as they relate to determining the optical flow between two consecutive frames. To use information from as many as all four camera images, concepts such as image transforms, epipolar geometry, and image mosaicing will be useful.
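As a minimal illustration of tracking a feature between two consecutive frames, the sketch below uses exhaustive block matching with a sum-of-squared-differences cost. This is a stand-in chosen for brevity, not necessarily the method the project would adopt. Frames are assumed to be grayscale images stored as lists of rows, and the names `patch_ssd` and `track_patch` are hypothetical.

```python
def patch_ssd(frame_a, frame_b, row, col, d_row, d_col, half):
    """Sum of squared differences between a patch in frame_a and a shifted patch in frame_b."""
    total = 0
    for r in range(row - half, row + half + 1):
        for c in range(col - half, col + half + 1):
            diff = frame_a[r][c] - frame_b[r + d_row][c + d_col]
            total += diff * diff
    return total


def track_patch(frame_a, frame_b, row, col, half=1, search=2):
    """Return the (d_row, d_col) shift that best matches the patch centred at (row, col).

    The patch itself must lie fully inside frame_a; shifts that would leave
    frame_b are skipped.
    """
    height, width = len(frame_b), len(frame_b[0])
    best_shift, best_cost = (0, 0), None
    for d_row in range(-search, search + 1):
        for d_col in range(-search, search + 1):
            new_row, new_col = row + d_row, col + d_col
            # Skip shifts that would push the patch outside frame_b.
            if not (half <= new_row < height - half and half <= new_col < width - half):
                continue
            cost = patch_ssd(frame_a, frame_b, row, col, d_row, d_col, half)
            if best_cost is None or cost < best_cost:
                best_shift, best_cost = (d_row, d_col), cost
    return best_shift


def make_frame(center_row, center_col, size=8):
    """Synthetic test frame: zeros with a 3x3 block of distinct values 1..9."""
    frame = [[0] * size for _ in range(size)]
    value = 1
    for r in range(center_row - 1, center_row + 2):
        for c in range(center_col - 1, center_col + 2):
            frame[r][c] = value
            value += 1
    return frame


frame_a = make_frame(3, 3)
frame_b = make_frame(4, 5)  # same block, moved 1 row down and 2 columns right
print(track_patch(frame_a, frame_b, row=3, col=3))  # prints (1, 2)
```

Running the tracker over many detected features would yield a sparse flow field; the search radius bounds the largest motion that can be recovered between frames.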

Tasks

Investigate methods for incorporating information from all four cameras (for example, creating a mosaic from the two extreme camera views or parallelizing image operations).

Develop or apply existing optical flow algorithms.

Develop a histogram or Markov Random Field data structure to model the optical flow as a function of robot control commands.

Extension: develop a control algorithm to drive the vehicle based on optical flow.
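The histogram option in the task list could look roughly like the sketch below: a binned lookup table that accumulates observed flow vectors and reports the mean flow for a given command and image location. The class name `FlowHistogram`, the bin widths, the units, and the choice to store only the mean per bin are assumptions made for illustration. The Markov Random Field alternative is not covered here.

```python
import math
from collections import defaultdict


class FlowHistogram:
    """Mean optical flow per (speed bin, turn-rate bin, image cell)."""

    def __init__(self, speed_bin=0.5, turn_bin=0.2, cell_size=40):
        self.speed_bin = speed_bin    # width of a speed bin (assumed m/s)
        self.turn_bin = turn_bin      # width of a turn-rate bin (assumed rad/s)
        self.cell_size = cell_size    # side length of a square image cell, in pixels
        # key -> [sum of dx, sum of dy, number of observations]
        self._bins = defaultdict(lambda: [0.0, 0.0, 0])

    def _key(self, speed, turn_rate, x, y):
        """Quantize a command and a pixel position into a bin index."""
        return (
            math.floor(speed / self.speed_bin),
            math.floor(turn_rate / self.turn_bin),
            int(x // self.cell_size),
            int(y // self.cell_size),
        )

    def add(self, speed, turn_rate, x, y, dx, dy):
        """Record one observed flow vector (dx, dy) at pixel (x, y)."""
        entry = self._bins[self._key(speed, turn_rate, x, y)]
        entry[0] += dx
        entry[1] += dy
        entry[2] += 1

    def mean_flow(self, speed, turn_rate, x, y):
        """Return the mean (dx, dy) seen in this bin, or None if the bin is empty."""
        entry = self._bins.get(self._key(speed, turn_rate, x, y))
        if entry is None:
            return None
        return (entry[0] / entry[2], entry[1] / entry[2])


model = FlowHistogram()
model.add(speed=1.0, turn_rate=0.0, x=100, y=200, dx=2.0, dy=4.0)
model.add(speed=1.1, turn_rate=0.05, x=110, y=210, dx=4.0, dy=6.0)
print(model.mean_flow(speed=1.2, turn_rate=0.1, x=105, y=205))  # prints (3.0, 5.0)
```

Both observations fall into the same bin, so the query returns their average. Coarser bins fill faster but blur the relationship between command and flow; finer bins need more driving data before they return anything.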

Project Status

John Rogers (jgrogers at stanford)

Joseph Baker-Malone (jmalone at stanford)

Christie Draper (cdraper at stanford)

Negin Nejati (negin at stanford)

Point of Contact

David Lieb, Andrew Lookingbill, and Hendrik Dahlkamp

Midterm Report

not yet submitted

Final Report

not yet submitted
